
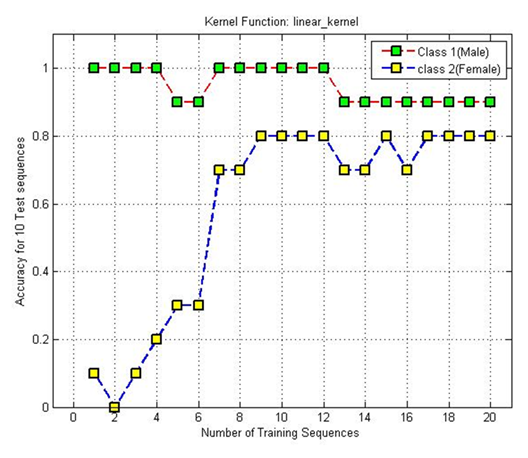

Support vector machines (SVMs) are a set of related supervised learning methods used for classification and regression. They belong to a family of generalized linear classifiers. A special property of SVMs is that they simultaneously minimize the empirical classification error and maximize the geometric margin; hence they are also known as maximum margin classifiers. Viewing the input data as two sets of vectors in an n-dimensional space, an SVM constructs a separating hyperplane in that space, one which maximizes the "margin" between the two data sets. To calculate the margin, we construct two parallel hyperplanes, one on each side of the separating one, which are "pushed up against" the two data sets. Intuitively, a good separation is achieved by the hyperplane that has the largest distance to the nearest data points of both classes. The hope is that the larger the margin, or distance between these parallel hyperplanes, the lower the generalization error of the classifier will be.
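To make the notion of margin concrete, here is a minimal sketch in plain Python that measures the geometric margin of a given hyperplane w·x + b = 0 as the smallest signed distance from any labelled point to it. The function name `geometric_margin`, the toy points, and the two candidate hyperplanes are assumptions chosen for illustration. The sketch does not train an SVM; it only compares the margins that two hand-picked separators achieve.

```python
import math

def geometric_margin(w, b, points, labels):
    """Smallest signed distance from any labelled point to the hyperplane w.x + b = 0.

    A positive result means every point lies on its correct side.
    """
    norm = math.sqrt(sum(wi * wi for wi in w))
    return min(
        y * (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm
        for x, y in zip(points, labels)
    )

# Hypothetical toy data: two linearly separable classes in 2-D
points = [(1.0, 1.0), (2.0, 0.0), (4.0, 4.0), (5.0, 3.0)]
labels = [-1, -1, 1, 1]

# Two candidate separating hyperplanes (both classify every point correctly)
margin_a = geometric_margin((1.0, 1.0), -5.0, points, labels)  # x1 + x2 = 5
margin_b = geometric_margin((1.0, 0.0), -3.0, points, labels)  # x1 = 3

print(f"margin of x1 + x2 = 5: {margin_a:.4f}")
print(f"margin of x1 = 3:      {margin_b:.4f}")
```

Both hyperplanes separate the toy data, but the first leaves more room on either side, so a maximum margin classifier would prefer it over the second.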

SSIP 2008, 16th Summer School on Image Processing, July 7th-16th, 2008, Vienna